Introduction

In supervised learning, we often use parametric models $p(\mathbf{y} \lvert \mathbf{X},\boldsymbol\theta)$ to explain data and infer optimal values of parameter $\boldsymbol\theta$ via maximum likelihood or maximum a posteriori estimation. If needed we can also infer a full posterior distribution $p(\boldsymbol\theta \lvert \mathbf{X},\mathbf{y})$ instead of a point estimate $\boldsymbol{\hat\theta}$. With increasing data complexity, models with a higher number of parameters are usually needed to explain data reasonably well. Methods that use models with a fixed number of parameters are called parametric methods.

In non-parametric methods, on the other hand, the number of parameters depend on the dataset size. For example, in Nadaraya-Watson kernel regression, a weight $w_i$ is assigned to each observed target $y_i$ and for predicting the target value at a new point $\mathbf{x}$ a weighted average is computed:

Observations that are closer to $\mathbf{x}$ have a higher weight than observations that are further away. Weights are computed from $\mathbf{x}$ and observed $\mathbf{x}_i$ with a kernel $\kappa$. A special case is k-nearest neighbors (KNN) where the $k$ closest observations have a weight $1/k$, and all others have weight $0$. Non-parametric methods often need to process all training data for prediction and are therefore slower at inference time than parametric methods. On the other hand, training is usually faster as non-parametric models only need to remember training data.

Another example of non-parametric methods are Gaussian processes (GPs). Instead of inferring a distribution over the parameters of a parametric function Gaussian processes can be used to infer a distribution over functions directly. A Gaussian process defines a prior over functions. After having observed some function values it can be converted into a posterior over functions. Inference of continuous function values in this context is known as GP regression but GPs can also be used for classification.

Univariate Gaussian distribution

The probability density function of a univariate Gaussian distribution:

$\boldsymbol{\mu}$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation.The variance of the distribution is $\sigma^2$.

Multivariate Gaussian distribution

The multivariate Gaussian distribution is a generalization of the univariate Gaussian distribution to higher dimensions. Assuming that the dimensions are independent of each other:

Equation $(2)$ in matrix form:

We have

Thus

$\boldsymbol{\mu} \in \mathbb{R}^N$ is the mean vector, $\mathbf{K} \in \mathbb{R}^{N \times N}$ is the covariance matrix, since we assume that the dimensions are independent of each other, $\mathbf{K}$ is a diagonal matrix. When the variables are correlated, the form of Equation $(3)$ is still the same, the covariance matrix $\mathbf{K}$ is no longer a diagonal matrix and only has the properties of positive semi-definite and symmetric.

Equation $(3)$ is usually abbreviated as:

Gaussian Processes

A Gaussian process is a random process where any point $\mathbf{x} = [\mathbf{x}_{1}, \mathbf{x}_{2}, \cdots, \mathbf{x}_{N}]$ is assigned a random variable $f(\mathbf{x}) = [f(\mathbf{x}_{1}), f(\mathbf{x}_{2}), \cdots, f(\mathbf{x}_{N})]$ and where the joint distribution of a finite number of these variables $p(f(\mathbf{x}_1),…,f(\mathbf{x}_N))$ is itself Gaussian:

In Equation $(4)$, , and . $m$ is the mean function and it is common to use $m(\mathbf{x}) = 0$ as GPs are flexible enough to model the mean arbitrarily well. $\kappa$ is a positive definite kernel function or covariance function. Thus, a Gaussian process is a distribution over functions whose shape (smoothness, …) is defined by $\mathbf{K}$. If points $\mathbf{x}_i$ and $\mathbf{x}_j$ are considered to be similar by the kernel the function values at these points, $f(\mathbf{x}_i)$ and $f(\mathbf{x}_j)$, can be expected to be similar too.

A GP prior $p(\mathbf{f} \lvert \mathbf{X})$ can be converted into a GP posterior $p(\mathbf{f} \lvert \mathbf{X},\mathbf{y})$ after having observed some data $\mathbf{y}$. The posterior can then be used to make predictions given new input :

Equation $(5)$ is the posterior predictive distribution which is also a Gaussian with mean and . By definition of the GP, the joint distribution of observed data $\mathbf{y}$ and predictions is

With $N$ training data and new input data, is , is and is . $\sigma_y^2$ is the noise term in the diagonal of $\mathbf{K_y}$. It is set to zero if training targets are noise-free and to a value greater than zero if observations are noisy. The mean is set to $\boldsymbol{0}$ for notational simplicity. The sufficient statistics of the posterior predictive distribution, and , can be computed with[1][3]

This is the minimum we need to know for implementing Gaussian processes and applying them to regression problems. For further details, please consult the literature in the References section. The next section shows how to implement GPs with plain NumPy from scratch, later sections demonstrate how to use GP implementations from scikit-learn.

Implementation with NumPy

Here, we will use the squared exponential kernel, also known as Gaussian kernel or RBF kernel:

The length parameter $l$ controls the smoothness of the function and $\sigma_f$ the vertical variation. For simplicity, we use the same length parameter $l$ for all input dimensions (isotropic kernel).

1 | import numpy as np |

There are many other kernels that can be used for Gaussian processes. See [3] for a detailed reference or the scikit-learn documentation for some examples.

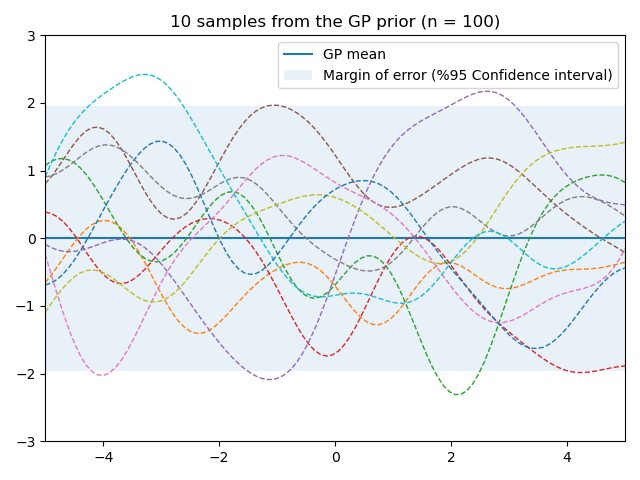

Prior

Let’s first define a prior over functions with mean zero and a covariance matrix computed with kernel parameters $l=1$ and $\sigma_f=1$. To draw random functions from that GP we draw random samples from the corresponding multivariate normal. The following example draws ten random samples and plots it together with the zero mean and the 95% confidence interval (computed from the diagonal of the covariance matrix).

1 | import matplotlib.pyplot as plt |

The plot_gp function is defined here.

1 | def plot_gp(mu, cov, X, X_train=None, Y_train=None, samples=[]): |

The plot_margin_of_error function is defined here.

1 | def plot_margin_of_error(X, mu, cov): |

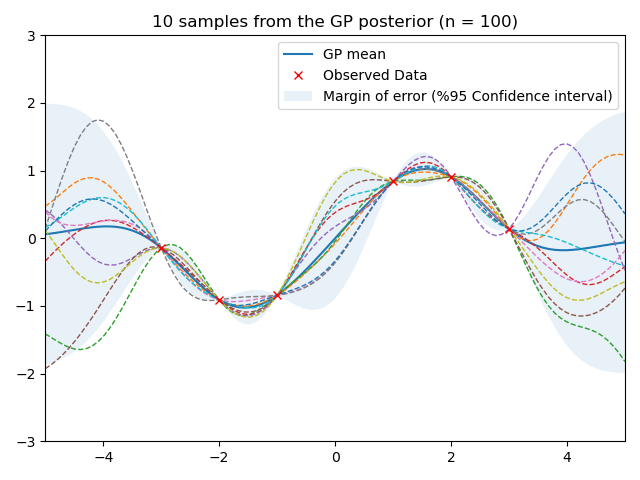

Prediction from noise-free training data

To compute the sufficient statistics i.e. mean and covariance of the posterior predictive distribution we implement Equations $(7)$ and $(8)$

1 | from numpy.linalg import inv |

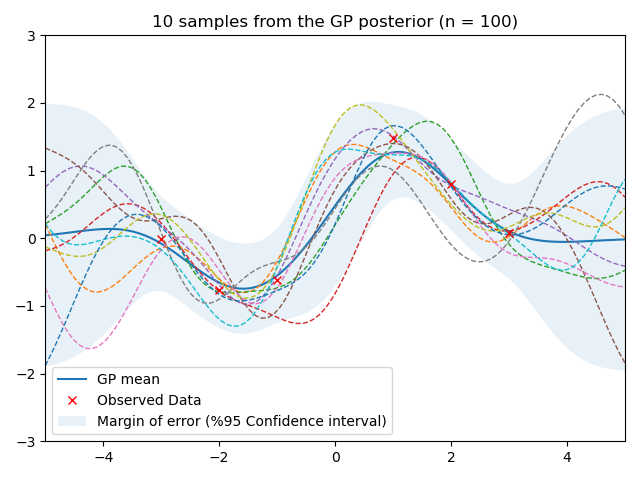

and apply them to noise-free training data X_train and Y_train. The following example draws ten samples from the posterior predictive and plots them along with the mean, confidence interval and training data. In a noise-free model, variance at the training points is zero and all random functions drawn from the posterior go through the trainig points.

1 | # Noise free training data |

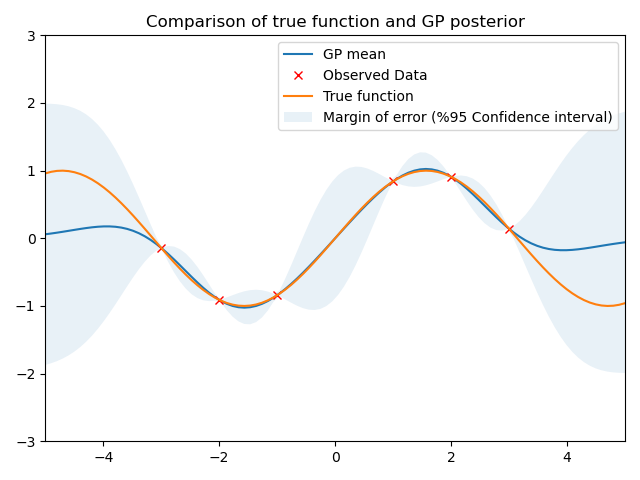

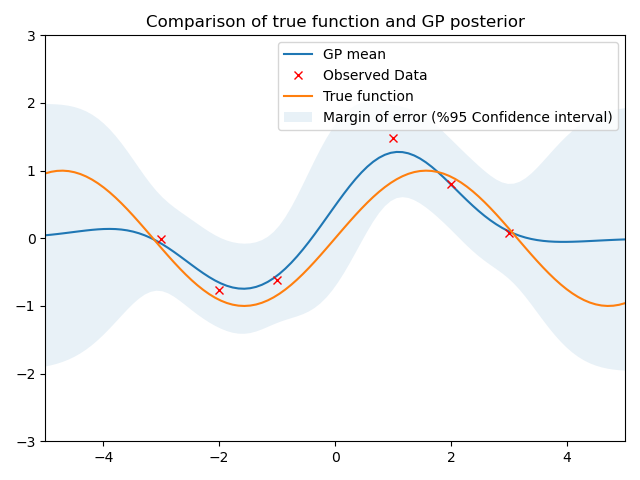

Comparison of true function and GP posterior.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16f_true = np.sin(X).flatten()

# Plot Gp mean

plt.plot(X, mu_s, ls='-', label='GP mean')

# Plot data observed

plt.plot(X_train, Y_train_noise_free, 'rx', label='Observed Data')

# Plot True function

plt.plot(X, f_true, label='True function')

# Plot Margin of error (%95 Confidence interval)

plot_margin_of_error(X, mu_s, cov_s)

plt.axis([-5, 5, -3, 3])

plt.legend()

plt.show()

|

|

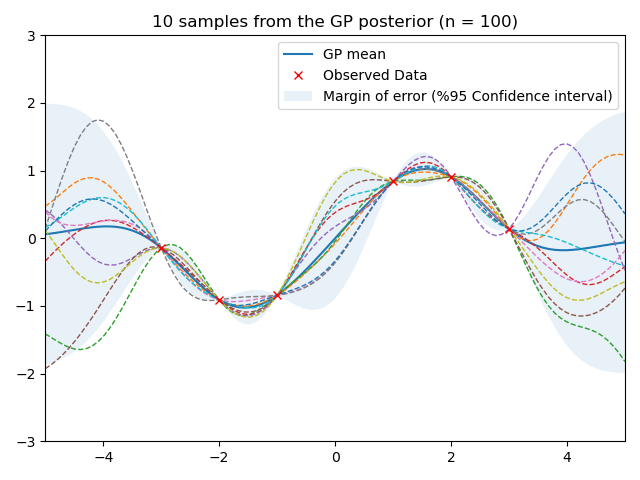

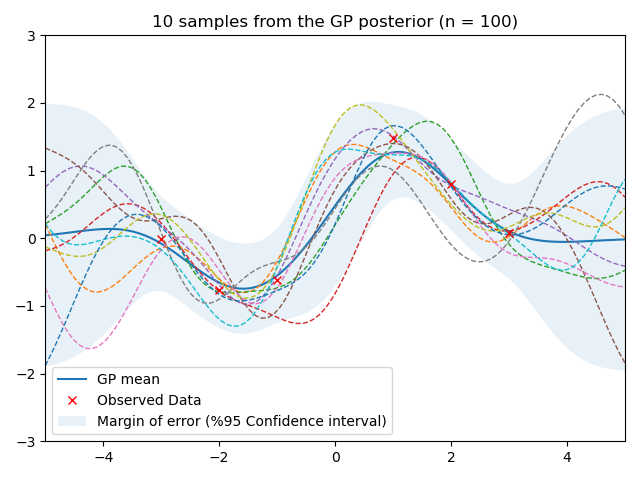

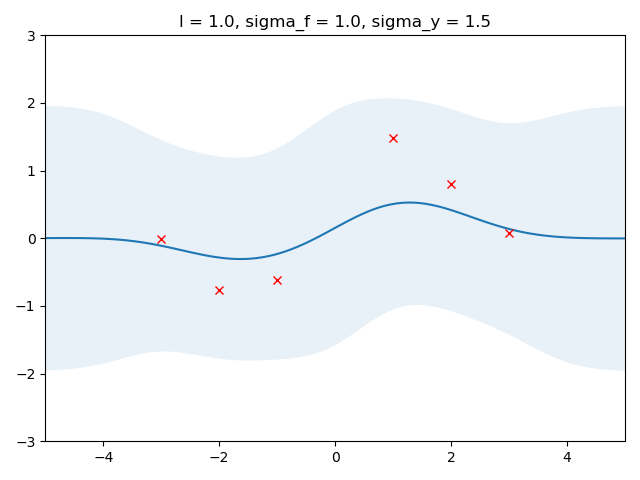

Prediction from noisy training data

If some noise is included in the model, training points are only approximated and the variance at the training points is non-zero.

1 | # Noisy training data |

Comparison of true function and GP posterior.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16f_true = np.sin(X).flatten()

# Plot Gp mean

plt.plot(X, mu_s, ls='-', label='GP mean')

# Plot data observed

plt.plot(X_train, Y_train_noisy, 'rx', label='Observed Data')

# Plot True function

plt.plot(X, f_true, label='True function')

# Plot Margin of error (%95 Confidence interval)

plot_margin_of_error(X, mu_s, cov_s)

plt.axis([-5, 5, -3, 3])

plt.legend()

plt.show()

|

|

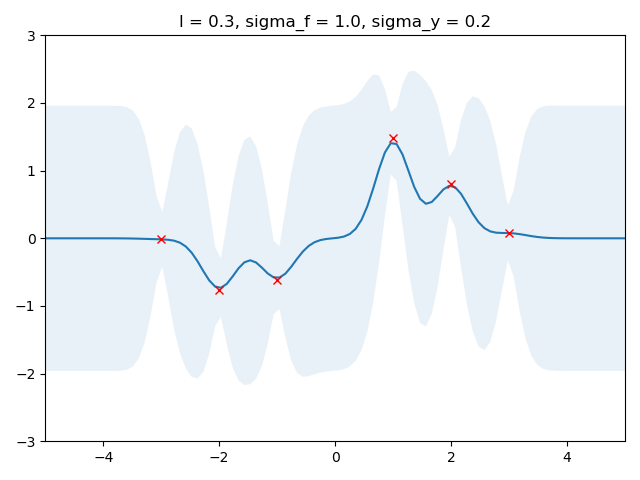

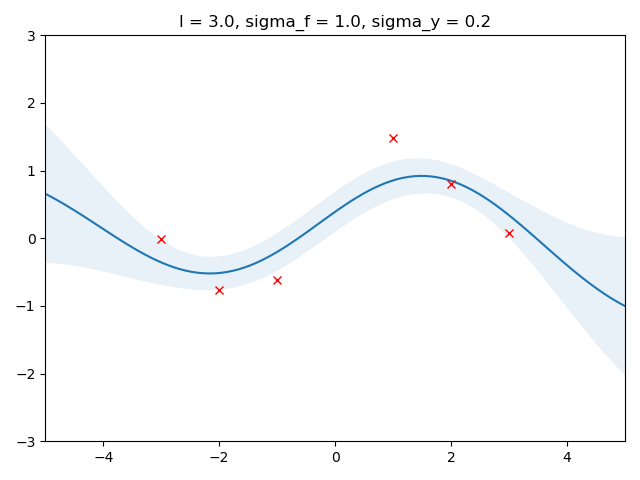

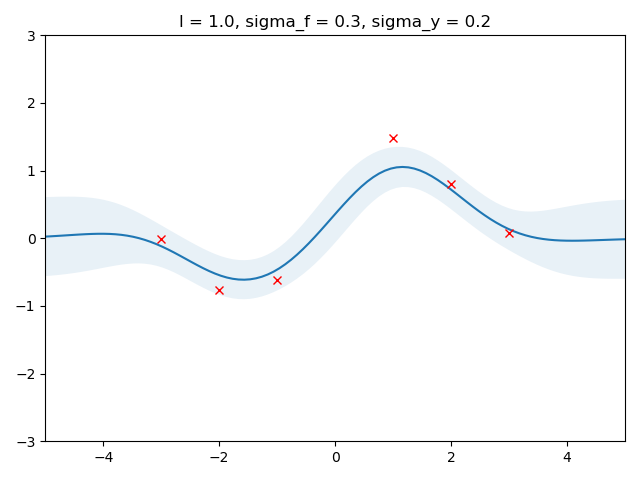

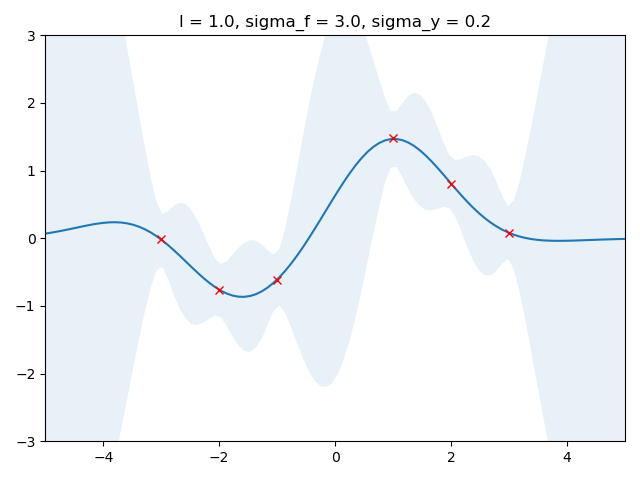

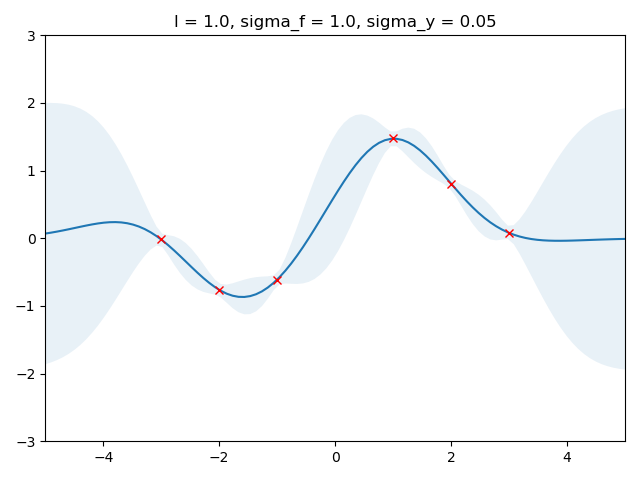

Effect of kernel parameters and noise parameter

The following example shows the effect of kernel parameters $l$ and $\sigma_f$ as well as the noise parameter $\sigma_y$. Higher $l$ values lead to smoother functions and therefore to coarser approximations of the training data. Lower $l$ values make functions more wiggly with wide confidence intervals between training data points. $\sigma_f$ controls the vertical variation of functions drawn from the GP. This can be seen by the wide confidence intervals outside the training data region in the right figure of the second row. $\sigma_y$ represents the amount of noise in the training data. Higher $\sigma_y$ values make more coarse approximations which avoids overfitting to noisy data.

1 | params = [ |

|

|

|

|

|

|

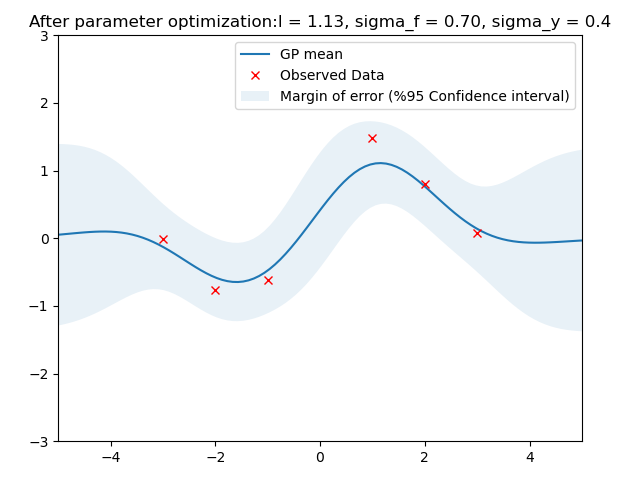

Optimal values for these parameters can be estimated by maximizing the log marginal likelihood which is given by[1][3]

In the following we will minimize the negative log marginal likelihood w.r.t. parameters $l$ and $\sigma_f$, $\sigma_y$ is set to the known noise level of the data. If the noise level is unknown, $\sigma_y$ can be estimated as well along with the other parameters.

1 | from numpy.linalg import cholesky, det, lstsq |

With optimized kernel parameters, training data are reasonably covered by the 95% confidence interval and the mean of the posterior predictive is a good approximation.

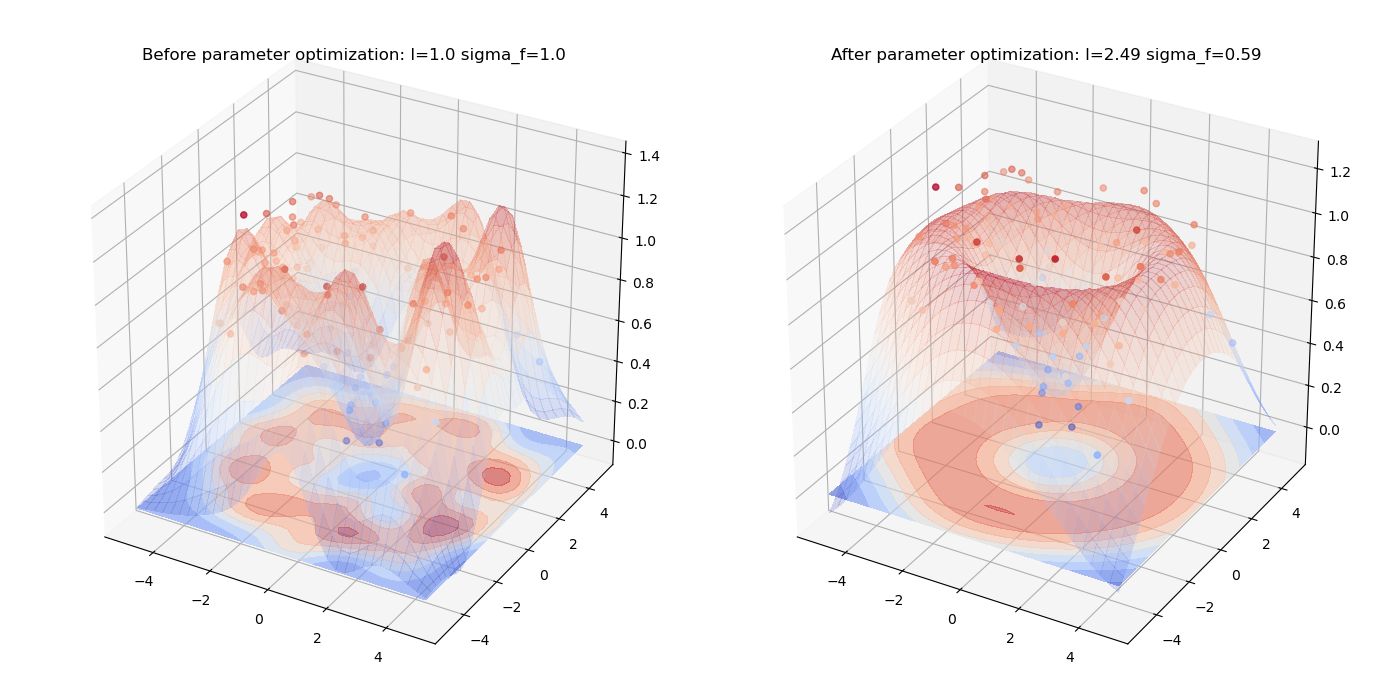

Higher dimensions

The above implementation can also be used for higher input data dimensions. Here, a GP is used to fit noisy samples from a sine wave originating at $\boldsymbol{0}$ and expanding in the x-y plane. The following plots show the noisy samples and the posterior predictive mean before and after kernel parameter optimization.

1 | from matplotlib import cm |

Note how the true sine wave is approximated much better after parameter optimization.

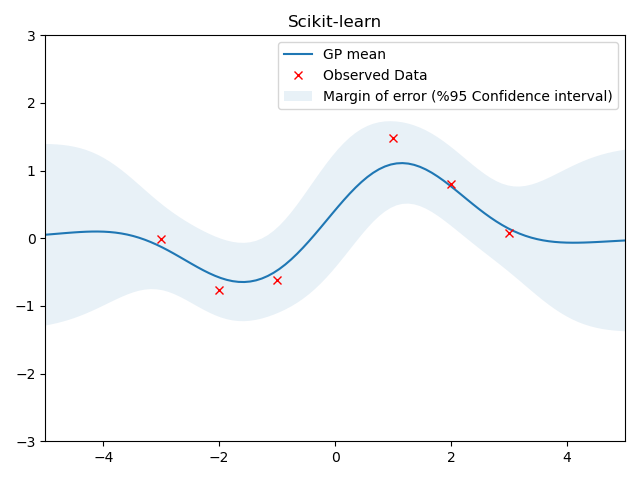

Library that implement GPs

This section shows a example of library that provide implementation of GPs. Only a minimal setup will be provided here, just enough for reproducing the above results. For further details please consult the documentation of the library.

Scikit-learn

Scikit-learn provides a GaussianProcessRegressor for implementing GP regression models. It can be configured with pre-defined kernels and user-defined kernels. Kernels can also be composed. The squared exponential kernel is the RBF kernel in scikit-learn. The RBF kernel only has a length_scale parameter which corresponds to the $l$ parameter above. To have a $\sigma_f$ parameter as well, we have to compose the RBF kernel with a ConstantKernel.

1 | from sklearn.gaussian_process import GaussianProcessRegressor |

References

[1] Kevin P. Murphy. Machine Learning, A Probabilistic Perspective, Chapters 4, 14 and 15.

[2] Christopher M. Bishop. Pattern Recognition and Machine Learning, Chapter 6.

[3] Carl Edward Rasmussen and Christopher K. I. Williams. Gaussian Processes for Machine Learning.

[4] Guibo Wang. Gaussian processes.

[5] Martin Krasser. Gaussian processes.